CS & AI Student | Aspiring Software Developer | Passion for ML and Wolfram

In my (totally unbiased) opinion, artificial intelligence is the most fascinating field in all of science. Yet ironically, I think that the name "artificial intelligence" fails to capture what is truly interesting about the field. Even though the concept of AI has been around for decades, experts still fundamentally disagree on what artificial intelligence actually means. Personally, I view AI not just as a subfield of computer science, but as a broader study of intelligence itself, both natural and artificial, and specifically of how complex, intelligent behaviour can emerge from simple rules or systems. This interpretation is deliberately broader than most. It includes neural networks and machine learning, but also biological evolution, physical systems, and so forth (if you can name it, it's intelligent). In my view, this breadth reflects the true essence of AI and cleanly encapsulates all of its innumerable subfields. You might reasonably argue that this definition is too vague. If we follow this logic, could we not call almost anything (for example, traffic lights, binoculars, waterfalls) an example of intelligence? This is a completely fair critique, and I welcome you to discuss it with me further if this type of thing interests you. But to define AI properly, we must first define intelligence, and that is where things get difficult.

To define intelligence, we would need a way to classify everything in the universe as either "intelligent" or "not intelligent." But making such a clean division is (in my opinion) impossible. Let us take large language models (LLMs) as an example. Most people agree they exhibit some form of intelligence, but fundamentally, they are just massive mathematical functions. So, if these functions are intelligent, are all mathematical functions intelligent? Probably not. Perhaps it is a matter of size or complexity, but then where exactly is the threshold? At what point does a function, or a system, become “intelligent”? Any cutoff point we choose would be arbitrary, a line drawn simply to satisfy our human desire to categorise things neatly. This arbitrariness suggests that our attempts to define intelligence in terms of strict boundaries are not only practically impossible, but functionally meaningless too.

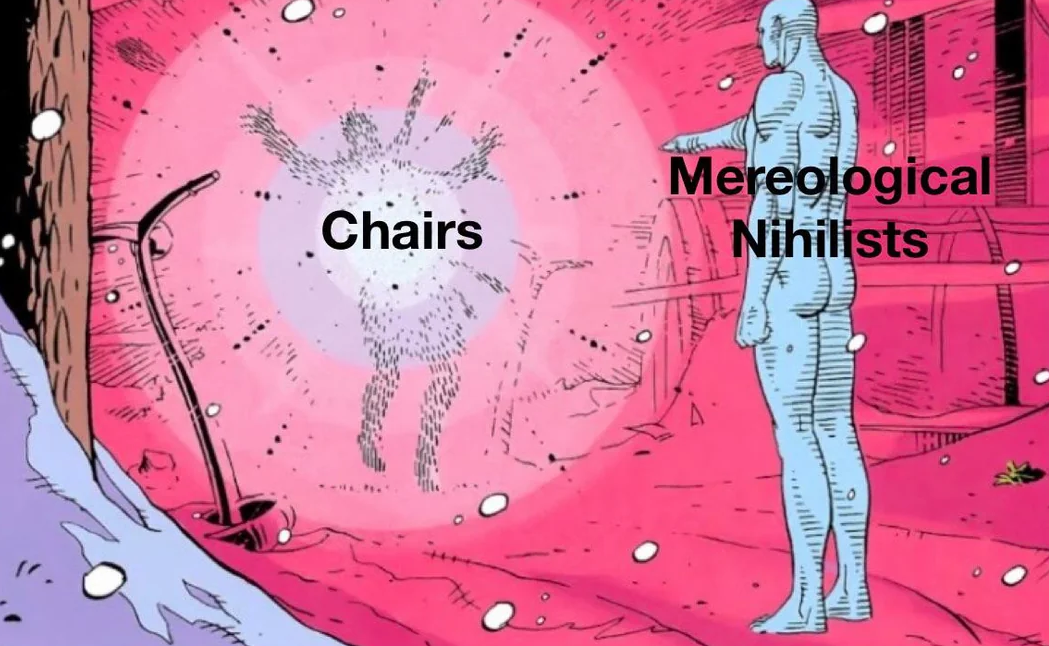

I hold the philosophical view of mereological nihilism, which suggests that objects like tables, trees, or even humans do not really “exist” as wholes. Only the smallest physical parts of the universe (like particles or fields) exist, and everything else is just a way of grouping them for our convenience. From this perspective, intelligence is not a property that belongs to specific objects (like people, robots, or animals). Instead, it is a property of the universe as a whole, expressed through different patterns and phenomena. For example, is a large language model intelligent when it answers your question? Is it less intelligent than a human writing an essay, or more intelligent than a tree evolving to survive in a harsh climate? From a universal standpoint, these are all just different manifestations of complex behaviour emerging from simple rules. The particles in a waterfall change constantly, yet we still call it “a waterfall.” It is not a fixed object, but a phenomenon. Following this logic, intelligence is not a property of any object, but rather the emergent property of the universe acting on itself.

If intelligence is something that can emerge from simple systems, and if there is no clear line between “intelligent” and “non-intelligent”, then we are left with two options:

As I have hopefully convinced you, artificial intelligence is not just about machines mimicking humans. It is about understanding the very nature of intelligent behaviour: how it arises, where it shows up, and whether it even makes sense to draw boundaries around it. Trying to rigidly define intelligence may be more about satisfying human psychology than uncovering objective truth. People often overlook the fact that AI is a study of ourselves just as much as it is a study of computer systems. Neural networks are intelligent agents, but so are humans and all living things; even society as a whole can be considered a single self-sustaining agent. To me, the most interesting question is not "can we do this or that with AI?", since eventually we will probably do most things with AI. Rather, we should ask: what does this say about us as individuals and as a species, and how will we be changed because of it? No technological advancement is ever purely technological; people change technology and technology changes people. We will be changed, that is inevitable, but we have the capacity to let AI be a change for better or for worse. And so, to finish this thought, I study AI so that this change is a positive one for all intelligent agents (toasters and fleshlings alike).

As my third-year individual project (the equivalent of a Bachelor's dissertation), I spent the best part of 8 months conducting a research study with the aim of developing an RL framework that can effectively and practically control traffic within a road network. I named my project "Building a Robust and Scalable Traffic Control System using Reinforcement Learning", and came out the other side with a 65-page report, plenty of research experience, a grade of 79%, and a receding hairline. For a bit of context, my analysis of the current scientific literature showed that RL has been successfully applied to optimise traffic control; however, I could find no evidence of RL being used (or even considered) for real-world use. After some early prototyping, I quickly found out why: it is incredibly difficult to train an RL agent to coordinate traffic dynamically and efficiently whilst also behaving safely without destabilising, a fact that has been largely ignored by current research, which is still hyperfocused on pure efficiency. For this reason, I tasked myself with developing an RL framework (that is, the type of agent and the features of the environment: state, action and reward) that produces an agent that is both robust (consistent, stable and safe) and scalable (able to function in any road network). One of my main contributions was demonstrating how to create a perfectly efficient traffic control system using a fixed-duration system, and why such a system is practically impossible (showing that safety, not efficiency, should be the key concern for system designers). I then planned, set up and conducted a series of rigorous experiments to exhaustively test combinations of features, finally arriving at a definitely superior (and most importantly, practical) system for robust and scalable behaviour engineering.
Although conclusive, these results are far too complicated to explain here, so if you are planning on doing a similar project and need help with picking an RL algorithm or a set of features for your RL environment, please contact me! Overall, this project was very rewarding; it is crazy to think about just how far my abilities as a programmer and AI engineer have come since the beginning. That being said, there are already several things that I would do differently, and I am looking forward to implementing these next academic year for my Master's thesis. My entire codebase for the project can be found at: https://github.com/Tom-Pecher/Third-Year-Project. If you are interested in this research or would like to see the dissertation itself, please feel free to reach out!
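
To give a flavour of what "state, action and reward" can mean for a traffic signal, here is a minimal toy sketch. It is not the framework from the dissertation (see the repository for that): the class name `IntersectionEnv`, the constants `MIN_GREEN_STEPS` and `SWITCH_PENALTY`, and the reward shape are all assumptions made up for illustration. It only shows one plausible way a safety constraint can sit alongside an efficiency term in the reward.

```python
import random
from dataclasses import dataclass, field

MIN_GREEN_STEPS = 3   # hypothetical safety rule: minimum steps before a phase may change
SWITCH_PENALTY = 5.0  # hypothetical penalty for requesting an unsafe early switch


@dataclass
class IntersectionEnv:
    """Toy single-intersection environment (illustrative only)."""
    arrival_prob: float = 0.3
    seed: int = 0
    queues: list = field(default_factory=lambda: [0, 0])  # [north-south, east-west]
    phase: int = 0            # index of the approach that currently has green
    steps_in_phase: int = 0

    def __post_init__(self):
        self.rng = random.Random(self.seed)

    def state(self):
        # State: both queue lengths, the current phase, and time spent in it.
        return (self.queues[0], self.queues[1], self.phase, self.steps_in_phase)

    def step(self, action):
        """action: 0 = keep the current phase, 1 = switch to the other phase."""
        penalty = 0.0
        if action == 1:
            if self.steps_in_phase >= MIN_GREEN_STEPS:
                self.phase = 1 - self.phase
                self.steps_in_phase = 0
            else:
                # Unsafe request: ignore it and penalise the agent.
                penalty = SWITCH_PENALTY
        # A vehicle may arrive on each approach.
        for i in range(2):
            if self.rng.random() < self.arrival_prob:
                self.queues[i] += 1
        # At most one vehicle leaves from the approach that has green.
        if self.queues[self.phase] > 0:
            self.queues[self.phase] -= 1
        self.steps_in_phase += 1
        # Reward: fewer waiting vehicles is better; unsafe requests cost extra.
        reward = -float(sum(self.queues)) - penalty
        return self.state(), reward


# Usage: a fixed-duration baseline that requests a switch every fifth step.
env = IntersectionEnv(seed=42)
total_reward = 0.0
for t in range(20):
    action = 1 if t % 5 == 4 else 0
    _, r = env.step(action)
    total_reward += r
```

In this sketch the environment itself refuses unsafe switches, so the agent cannot break the constraint even while it is still learning; the penalty simply discourages it from asking.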

As part of a group project (and our collective introduction to RL), we conducted an experiment in which we implemented a variety of RL-based methods and set them to train on the OpenAI Gym bipedal walker environment. Specifically, we pretrained the models on the base environment (a flat surface) and then tested the best performing models on the "hardcore" environment (a surface with random bumps and holes). This is a common RL problem and is widely considered to be quite difficult. Nevertheless, we managed to train a Soft Actor-Critic (SAC) agent and a SUNRISE agent to solve the hardcore environment (reach 300+ reward). More impressively, the SUNRISE agent managed to converge to this optimal strategy five times faster than the existing models we could find. This project was great fun and has made me go down an RL rabbit hole that I am still exploring in my individual project. Many thanks to my group members for their hard work and dedication that made this project possible (they are all great programmers and great people, so check out their LinkedIns here: Marilyn D'Costa, Ptolemy Morris, Dhru Randeria, George Rawlinson).

As part of the VIP study "Creating immersive training experiences in VR", we intended to create a VR simulation for training users to be "effective bystanders" when witnessing sexual harassment. As lead developer, my role was to create a system that would classify the user's speech towards the perpetrator into one of a set of predetermined actions (such as distracting the perpetrator). Using TensorFlow, I implemented and fine-tuned an LSTM model that managed to reach 97% accuracy on test data. The system is currently in the testing phase and I hope my contribution will help the study reach its goals.

As part of the "Experimental Systems Project" group module, we created a system that generates subtitles for any video in real time. A key goal for our system was to ensure that the underlying model would be able to perform even in noisy scenarios where the audio quality was poor. We achieved this by dynamically switching between models to best adapt to the audio conditions. The system was a success, and we were able to demonstrate it to our peers and lecturers.
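
As a rough illustration of the idea of switching models based on audio conditions, here is a minimal sketch. The model names, the thresholds, and the use of a signal-to-noise estimate as the switching criterion are all assumptions for the sake of the example; the actual system may well have used different signals and models.

```python
import math

# Hypothetical model names and SNR thresholds (in dB), for illustration only.
# Ordered from the cleanest audio requirement to the catch-all fallback.
MODELS_BY_MIN_SNR_DB = [
    (20.0, "fast-clean-speech-model"),
    (5.0, "general-purpose-model"),
    (-math.inf, "noise-robust-model"),
]


def rms(samples):
    """Root-mean-square amplitude of a chunk of audio samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_snr_db(chunk, noise_floor_rms):
    """Crude SNR estimate: chunk level relative to a known noise floor."""
    signal = rms(chunk)
    if signal <= 0.0:
        return -math.inf  # silence: treat as the worst case
    if noise_floor_rms <= 0.0:
        return math.inf   # no measurable noise
    return 20.0 * math.log10(signal / noise_floor_rms)


def select_model(snr_db):
    """Pick the first model whose minimum SNR requirement is met."""
    for min_snr, name in MODELS_BY_MIN_SNR_DB:
        if snr_db >= min_snr:
            return name
    return MODELS_BY_MIN_SNR_DB[-1][1]


# Usage: choose a model for each incoming audio chunk.
chunk = [0.5, -0.4, 0.6, -0.5]
model_name = select_model(estimate_snr_db(chunk, noise_floor_rms=0.2))
```

In a real-time setting one would probably smooth the estimate over several chunks (or add some hysteresis) so that the system does not flip between models on every frame.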

Since it is currently the holidays, the only work I am doing is making half-baked GitHub repositories at 3am and forgetting about them the next day.

Jokes aside, I am spending this time of relative calm preparing for my Master's thesis, which will be NLP-based. I plan to create specialised LLMs that can understand, summarise and answer questions on scientific literature. If you think that I am doing this just to make writing my thesis easier... YES, but that is not my main reason. In truth, I think that there is so much incredible scientific progress happening all around us, yet even though our technology is improving exponentially, our ability to perceive it is not. A century ago, being an academic meant being a master of your field and having a broad knowledge of everything, but now, with so much progress being made, we count ourselves lucky if we can comprehend even a tiny sliver of it. My goal with this final year project will be not just to advance the field of NLP (although that is the main part of it), but to advance all of science by making its lifeblood, the unrestricted sharing of information, accessible to everyone. This is all very pretentious, I know, but as someone who loves learning and self-improvement, it deeply frustrates me to see how much progress is lost and time wasted because of the sheer volume of information it is buried within.

Aside from this, I am also working on:

When I am less busy, I hope to delve into some of these potential avenues: